Gerrit Broekhuis

Programmer

Hi,

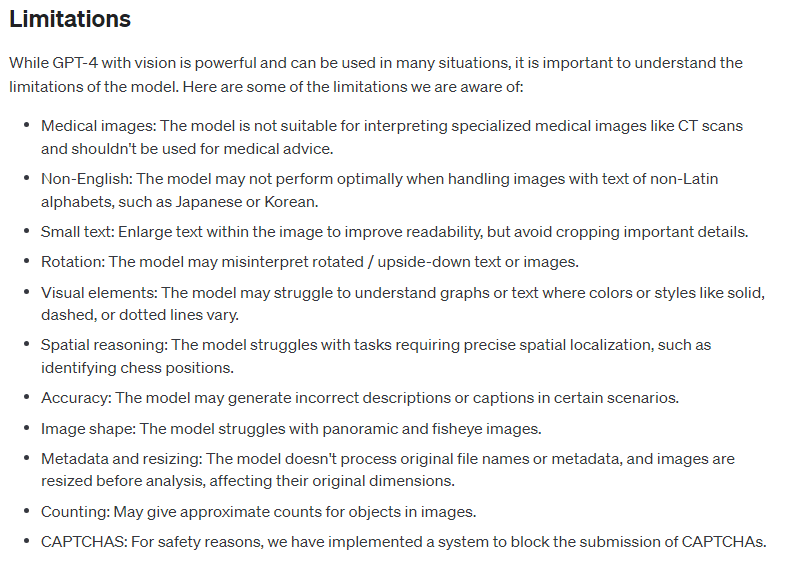

OpenAI’s “GPT-4 Turbo with Vision” should be able to perform OCR.

With help from VFP forum members here and elsewhere, I’ve been able to create text proposals and use speech recognition in our applications.

For OCR we’re now using Tesseract, but I think GPT-4 may be a good alternative.

Has anyone tried this already? Any hints or tips?

Regards, Gerrit
